
Introduction:

AWS Storage Services follow the cloud storage model for storing data online, which eliminates the upfront cost of building a data center and the cost of expanding it later. Instead, the Pay-As-You-Go model suits on-demand capacity expansion and reduction: you pay only for the capacity you use, for as long as you use it.

AWS offers high data availability and durability at a very low cost, and its storage services provide building blocks for Disaster Recovery, Data Archiving, and Backup solutions.

Types of AWS Storage Services

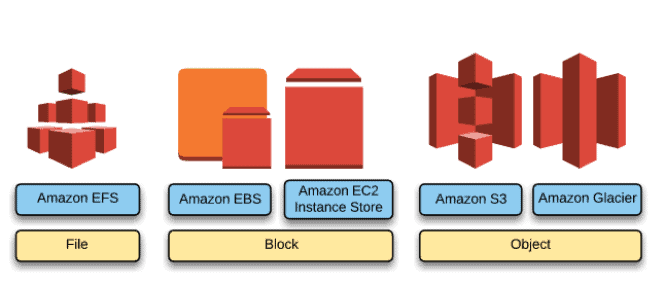

AWS Cloud Storage is broadly categorized into three types:

Amazon Block Storage

Amazon File Storage

Amazon Object Storage

Amazon Block Storage

Amazon EBS (Elastic Block Store) is a block storage service designed for Amazon EC2 (Elastic Compute Cloud). Think of an EBS volume as a detachable storage drive attached to an EC2 instance; once it is attached, you can create a file system on the volume and use it to run databases or applications. A volume can also be detached and attached to another EC2 instance in the same Availability Zone if needed. This type of storage is all about performance: it essentially functions as a hard disk connected to whatever is using it.

Features of Amazon EBS

An easy-to-use, high-performance, and scalable block storage service.

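You can create volumes and attach them to Amazon EC2 instances. Volumes are persistent, meaning their data exists independently of the instance: a non-root volume is retained when the instance is terminated, while the root volume is deleted on termination by default unless its DeleteOnTermination flag is set to false.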

Each volume is created in a specific Availability Zone, where it is automatically replicated to provide high availability and to protect against the failure of a single component.


Depending on performance and cost, Amazon EBS volumes fall into two broad categories: SSD-backed storage and HDD-backed storage.

Solid State Drive (SSD)-backed storage

| Volume Type | EBS Provisioned IOPS SSD (io2 Block Express) | EBS Provisioned IOPS SSD (io2) | EBS Provisioned IOPS SSD (io1) | EBS General Purpose SSD (gp3) | EBS General Purpose SSD (gp2) |
| --- | --- | --- | --- | --- | --- |
| Short Description | Highest performance SSD volume designed for business-critical latency-sensitive transactional workloads | Highest performance and highest durability SSD volume designed for latency-sensitive transactional workloads | Highest performance SSD volume designed for latency-sensitive transactional workloads | Lowest cost SSD volume that balances price performance for a wide variety of transactional workloads | General Purpose SSD volume that balances price performance for a wide variety of transactional workloads |
| Durability | 99.999% | 99.999% | 99.8% - 99.9% | 99.8% - 99.9% | 99.8% - 99.9% |
| Use Cases | Largest, most I/O intensive, mission critical deployments of NoSQL and relational databases such as Oracle, SAP HANA, Microsoft SQL Server, and SAS Analytics | I/O-intensive NoSQL and relational databases | I/O-intensive NoSQL and relational databases | Virtual desktops, medium sized single instance databases such as Microsoft SQL Server and Oracle, latency sensitive interactive applications, boot volumes, and dev/test environments | Virtual desktops, medium sized single instance databases such as Microsoft SQL Server and Oracle, latency sensitive interactive applications, boot volumes, and dev/test environments |
| API Name | io2 | io2 | io1 | gp3 | gp2 |
| Volume Size | 4 GiB - 64 TiB | 4 GiB - 16 TiB | 4 GiB - 16 TiB | 1 GiB - 16 TiB | 1 GiB - 16 TiB |
| Max IOPS/Volume | 256,000 | 64,000 | 64,000 | 16,000 | 16,000 |
| Max Throughput/Volume | 4,000 MB/s | 1,000 MB/s | 1,000 MB/s | 1,000 MB/s | 250 MB/s |
| Max IOPS/Instance | 350,000 | 160,000 | 350,000 | 260,000 | 260,000 |
| Max Throughput/Instance | 10,000 MB/s | 4,750 MB/s | 10,000 MB/s | 10,000 MB/s | 7,500 MB/s |
| Latency | sub-millisecond | single-digit millisecond | single-digit millisecond | single-digit millisecond | single-digit millisecond |
| Price | $0.125/GB-month | $0.125/GB-month | $0.125/GB-month | $0.08/GB-month | $0.10/GB-month |
| Dominant Performance Attribute | IOPS, throughput, latency, capacity, and volume durability | IOPS and volume durability | IOPS | IOPS | IOPS |

Note: This data was current at the time of writing; all credit goes to the official AWS documentation.

\* Default volume type

\*\* Not currently supported by the EC2 instances which support io2 Block Express

\*\*\* Volume throughput is calculated as MB = 1024^2 bytes
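To make the pay-as-you-go arithmetic concrete, here is a minimal sketch that estimates a volume's storage charge from the per-GB-month prices quoted for io2 ($0.125), gp3 ($0.08), and gp2 ($0.10). It assumes a 730-hour month and simple proration by the hours the volume exists, and it ignores separately billed provisioned IOPS/throughput and regional price differences. `monthly_storage_cost` and `PRICE_PER_GB_MONTH` are hypothetical names.

```python
# Hypothetical helper. Prices are the per-GB-month storage prices quoted in this article.
# Assumptions: 730-hour month, linear proration by hours used, storage charge only
# (provisioned IOPS/throughput charges and regional price differences are ignored).
PRICE_PER_GB_MONTH = {"io2": 0.125, "gp3": 0.08, "gp2": 0.10}
HOURS_PER_MONTH = 730.0


def monthly_storage_cost(volume_type: str, size_gb: int, hours_used: float = HOURS_PER_MONTH) -> float:
    """Return the prorated storage cost in USD, rounded to cents."""
    price = PRICE_PER_GB_MONTH[volume_type]
    return round(price * size_gb * (hours_used / HOURS_PER_MONTH), 2)


if __name__ == "__main__":
    for vt in ("gp2", "gp3", "io2"):
        print(f"500 GB {vt}: ${monthly_storage_cost(vt, 500):.2f}/month")
    # Pay-as-you-go: a volume kept for only 10 days (240 hours) is billed for that time only
    print(f"500 GB gp3 for 240 h: ${monthly_storage_cost('gp3', 500, 240):.2f}")
```

For a 500 GB volume this gives $50.00 (gp2), $40.00 (gp3), and $62.50 (io2) per month, before any performance charges.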

EBS Provisioned IOPS SSD:

This category includes the Provisioned IOPS SSD volumes (io2 Block Express, io2, and io1), which are built for IOPS-intensive workloads.

They are designed for I/O-intensive and latency-sensitive transactional workloads, with up to 256,000 IOPS, 4,000 MB/s of throughput, and 64 TiB of capacity per volume (io2 Block Express).
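The per-volume ceilings above interact with volume size: you cannot provision the maximum IOPS on a tiny volume. The sketch below computes the highest IOPS a volume can be provisioned with. Sizes and per-volume maximums come from the SSD table; the IOPS-to-GiB ratio caps (50 for io1, 500 for io2, 1,000 for io2 Block Express) are assumptions based on AWS documentation at the time of writing and should be checked against current docs. The dictionary keys and function name are hypothetical; in the EBS API both io2 variants use the name `io2`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PiopsLimits:
    min_gib: int
    max_gib: int
    max_iops: int          # per-volume IOPS ceiling (from the SSD table)
    max_iops_per_gib: int  # assumed IOPS-to-size ratio cap; verify against current AWS docs


# Keys are illustrative labels: in the EBS API both io2 variants use the name "io2".
LIMITS = {
    "io1": PiopsLimits(4, 16 * 1024, 64_000, 50),
    "io2": PiopsLimits(4, 16 * 1024, 64_000, 500),
    "io2-block-express": PiopsLimits(4, 64 * 1024, 256_000, 1_000),
}


def max_provisionable_iops(kind: str, size_gib: int) -> int:
    """Highest IOPS value that can be provisioned for a volume of this size."""
    lim = LIMITS[kind]
    if not lim.min_gib <= size_gib <= lim.max_gib:
        raise ValueError(f"{kind} volumes must be {lim.min_gib}-{lim.max_gib} GiB")
    # Bounded both by the size-based ratio and by the per-volume maximum
    return min(size_gib * lim.max_iops_per_gib, lim.max_iops)


if __name__ == "__main__":
    for kind in LIMITS:
        print(kind, max_provisionable_iops(kind, 100), max_provisionable_iops(kind, 2_000))
```

Under these assumptions a 100 GiB volume tops out at 5,000 IOPS on io1, 50,000 on io2, and 100,000 on io2 Block Express.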

Among these, the latest generation is io2 Block Express, which delivers more throughput and IOPS than a regular io2 volume at the same price, with sub-millisecond latency.

io2 Block Express is ideal for mission-critical workloads such as Oracle, Microsoft SQL Server, SAP HANA, and SAS Analytics, with 99.999% durability.

io2 Block Express is available in all Regions where supported instances are available. Instance availability might vary by Availability Zone.

io1, io2, and io2 Block Express volumes support Multi-Attach, which lets you attach a single volume to up to sixteen Nitro-based EC2 instances in the same Availability Zone.

General purpose SSD (gp3 and gp2) volumes

General Purpose SSD volumes balance price and performance for a wide variety of transactional workloads.

They provide 99.8% - 99.9% durability with single-digit millisecond latency, which makes them ideal for virtual desktops, medium-sized single-instance databases such as Microsoft SQL Server and Oracle, latency-sensitive interactive applications, boot volumes, and dev/test environments.

Amazon gp3 volumes are the latest generation of General Purpose SSD EBS volumes. They let customers provision performance independently of storage capacity, at up to 20% lower price per GB than gp2 volumes.
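The difference between "performance tied to size" (gp2) and "performance provisioned independently" (gp3) is easiest to see numerically. This sketch assumes the documented gp2 rule of 3 IOPS per GiB (minimum 100, maximum 16,000, burst credits not modeled) and a gp3 baseline of 3,000 IOPS at any size, provisionable up to 16,000; the 500 IOPS-per-GiB cap for gp3 is an assumption to verify. Function names are hypothetical.

```python
def gp2_baseline_iops(size_gib: int) -> int:
    """gp2 performance scales with size: 3 IOPS per GiB, min 100, max 16,000.
    (Burst credits for small volumes are not modeled.)"""
    return max(100, min(3 * size_gib, 16_000))


def gp3_iops(size_gib: int, provisioned_iops: int = 3_000) -> int:
    """gp3 includes a 3,000 IOPS baseline at any size; more (up to 16,000) is provisioned
    separately. Assumption: IOPS above 3,000 are capped at 500 IOPS per GiB."""
    if not 3_000 <= provisioned_iops <= 16_000:
        raise ValueError("gp3 IOPS must be between 3,000 and 16,000")
    if provisioned_iops > max(3_000, 500 * size_gib):
        raise ValueError("volume too small for the requested IOPS")
    return provisioned_iops


if __name__ == "__main__":
    for size in (20, 500, 1_000, 6_000):
        print(f"{size:>5} GiB  gp2={gp2_baseline_iops(size):>6}  gp3={gp3_iops(size):>6}")
```

A 500 GiB gp2 volume gets 1,500 baseline IOPS, while the same-size gp3 volume starts at 3,000 and can be raised without growing the disk.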

Hard Disk Drives (HDD)

| Volume Type | Throughput Optimized HDD (st1) | Cold HDD (sc1) |
| --- | --- | --- |
| Short Description | Low-cost HDD volume designed for frequently accessed, throughput-intensive workloads | Lowest-cost HDD volume designed for less frequently accessed workloads |
| Durability | 99.8% - 99.9% | 99.8% - 99.9% |
| Use Cases | Big data, data warehouses, log processing | Colder data requiring fewer scans per day |
| API Name | st1 | sc1 |
| Volume Size | 125 GiB - 16 TiB | 125 GiB - 16 TiB |
| Max IOPS/Volume | 500 | 250 |
| Max Throughput\*/Volume | 500 MB/s | 250 MB/s |
| Max Throughput/Instance | 10,000 MB/s | 7,500 MB/s |
| Price | $0.045/GB-month | $0.015/GB-month |
| Dominant Performance Attribute | MB/s | MB/s |

Note: The data in the table above was current at the time of writing; all credit goes to the official AWS documentation.

For EBS Magnetic volumes, see the Previous Generation Volumes page in the AWS documentation.

\* st1/sc1 throughput figures are based on a 1 MB I/O size

\*\* Volume throughput is calculated as MB = 1024^2 bytes

HDD-backed volumes (MB/s-intensive)

The Throughput Optimized HDD volumes (st1) are ideal for frequently accessed, throughput-intensive workloads with large datasets and large I/O sizes, which makes them a good fit for data warehouses and ETL workloads.

This storage type suits large, sequential big-data workloads where throughput matters more than latency. st1 volumes cannot be used as boot (root) volumes for EC2 instances.

The Cold HDD volumes (sc1) are ideal for less frequently accessed workloads with large, cold datasets. They are the cheapest of all EBS volume types.

Both st1 and sc1 deliver the expected throughput performance 99% of the time and have enough I/O credits to support a full-volume scan at the burst rate.
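To put "a full-volume scan at the burst rate" in perspective, the sketch below estimates how long such a scan takes. The per-TiB burst rates (250 MiB/s per TiB for st1, 80 MiB/s per TiB for sc1) and the per-volume caps are assumptions taken from AWS documentation at the time of writing; the names are hypothetical and I/O credit accounting is not modeled.

```python
# Assumed per-TiB burst rates and per-volume caps (MiB/s), from AWS documentation at the
# time of writing; verify before relying on them.
BURST_MIBPS_PER_TIB = {"st1": 250, "sc1": 80}
MAX_MIBPS = {"st1": 500, "sc1": 250}


def burst_throughput_mibps(kind: str, size_tib: float) -> float:
    """Burst throughput grows with volume size until it hits the per-volume cap."""
    return min(BURST_MIBPS_PER_TIB[kind] * size_tib, MAX_MIBPS[kind])


def full_scan_hours(kind: str, size_tib: float) -> float:
    """Time to read every byte of the volume sequentially at the burst rate."""
    size_mib = size_tib * 1024 * 1024
    return size_mib / burst_throughput_mibps(kind, size_tib) / 3600


if __name__ == "__main__":
    for kind, size in (("st1", 1), ("st1", 4), ("sc1", 1)):
        print(f"{kind} {size} TiB: {burst_throughput_mibps(kind, size):.0f} MiB/s, "
              f"{full_scan_hours(kind, size):.2f} h")
```

Under these assumptions a 1 TiB st1 volume can be scanned in a little over an hour, while the same scan on sc1 takes about 3.6 hours.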

Amazon EBS Elastic Volumes

Elastic Volumes is an EBS feature that lets you dynamically increase capacity, change the type of a live volume, and tune performance with no downtime. While a modification is being optimized, the volume's performance lies between the old and new specifications.

Modifying a volume's configuration carries no extra fee, but you are charged at the rate of the new configuration once the modification starts.

Instance Store

Instance store is block storage on disks that are physically attached to the host server, which gives it very high random I/O performance.

It is also referred to as ephemeral (temporary) block-level storage, meaning the data on it is lost in the following cases:

The underlying disk drive fails.

The instance stops, i.e., an EBS-backed instance with instance store volumes attached is stopped.

The instance is terminated.

Data does, however, persist across a reboot.

Instance store volumes are attached when the instance is launched and cannot be resized dynamically.

Instance store is ideal for temporary data that changes frequently, such as caches and buffers.
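Because instance store data survives only a reboot, an application that keeps a cache there has to detect when the data has been wiped. This minimal sketch encodes the rules listed above as a lookup table; the `Event` enum and `cache_must_be_rebuilt` helper are hypothetical names for illustration.

```python
from enum import Enum
from typing import Iterable


class Event(Enum):
    REBOOT = "reboot"
    STOP = "stop"
    TERMINATE = "terminate"
    DISK_FAILURE = "underlying disk failure"


# Whether instance store data survives each event, per the list above.
SURVIVES = {
    Event.REBOOT: True,
    Event.STOP: False,
    Event.TERMINATE: False,
    Event.DISK_FAILURE: False,
}


def cache_must_be_rebuilt(events: Iterable[Event]) -> bool:
    """A cache kept on instance store must be rebuilt if any event wiped the data."""
    return any(not SURVIVES[e] for e in events)


if __name__ == "__main__":
    print(cache_must_be_rebuilt([Event.REBOOT, Event.REBOOT]))  # False
    print(cache_must_be_rebuilt([Event.REBOOT, Event.STOP]))    # True
```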

EBS Encryption

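All data at rest on the volume, all disk I/O between the volume and the instance, and all snapshots created from the volume are encrypted.

It uses the 256-bit AES algorithm (AES-256) with keys managed by AWS KMS (the AWS managed key by default, or a customer managed key).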

Snapshots of an encrypted EBS volume will be encrypted automatically.

EBS Snapshot

EBS Snapshots are incremental backups of EBS volumes: after the first snapshot, only the blocks that changed since the previous snapshot are saved.

It occurs asynchronously and consumes the instance IOPS.

An EBS Snapshot is stored in a single Region and cannot span Regions.

EBS Snapshots can be copied across regions to make it easier to leverage multiple regions for geographical expansion, data center migration, and disaster recovery.

EBS Snapshots can be shared by making them public or with specific AWS accounts by modifying the access permissions of the snapshots.

EBS Snapshots support EBS encryption:

Snapshots of an encrypted EBS volume will be encrypted automatically.

Volumes created from encrypted snapshots will be encrypted automatically.

All data in flight between the instance and the volume is encrypted.

Volumes created from an unencrypted snapshot that you own or have access to can be encrypted on the fly.

An encrypted snapshot that you own or have access to can be re-encrypted with a different key during the copy process.

EBS Snapshots can be automated using Amazon Data Lifecycle Manager.
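The incremental behavior of snapshots can be illustrated with a toy in-memory model: each snapshot stores only the blocks that differ from the previous one, and restoring a snapshot replays the chain up to that point. This is a sketch under simplifying assumptions (a fixed-size volume whose blocks are only overwritten, and no snapshot deletion); `SnapshotChain` is a hypothetical class, not how EBS is implemented internally.

```python
from typing import Dict, List

Volume = Dict[int, bytes]  # block index -> block contents (fixed-size volume)


class SnapshotChain:
    """Toy model of incremental snapshots: each snapshot stores only the blocks that
    changed since the previous snapshot; a restore replays the chain in order."""

    def __init__(self) -> None:
        self.deltas: List[Volume] = []
        self._previous: Volume = {}

    def take(self, volume: Volume) -> int:
        # Keep only blocks whose contents differ from the previous snapshot
        delta = {i: b for i, b in volume.items() if self._previous.get(i) != b}
        self.deltas.append(delta)
        self._previous = dict(volume)
        return len(self.deltas) - 1

    def restore(self, snapshot_id: int) -> Volume:
        volume: Volume = {}
        for delta in self.deltas[: snapshot_id + 1]:
            volume.update(delta)
        return volume


if __name__ == "__main__":
    vol = {0: b"boot", 1: b"data-v1", 2: b"logs"}
    chain = SnapshotChain()
    first = chain.take(vol)        # first snapshot: all 3 blocks
    vol[1] = b"data-v2"
    second = chain.take(vol)       # incremental: only block 1
    print([len(d) for d in chain.deltas])   # [3, 1]
    print(chain.restore(first)[1], chain.restore(second)[1])
```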

Amazon File Storage

Amazon EFS (Elastic File System) provides a network file system as a service that is scalable, elastic, highly available, and durable.

EFS stores data redundantly across multiple Availability Zones. It grows and shrinks automatically as files are added and removed, so there is no need to manage storage procurement or provisioning.

EFS is compatible with the Network File System protocol versions NFSv4 and NFSv4.1.

EFS stores data as objects, and each object is stored redundantly across multiple Availability Zones within a Region.

EFS is a fully managed, cost-optimized file storage service that is easy to set up and scale.

EFS can automatically scale from gigabytes (GB) to petabytes (PB) of data without needing to provision storage.

EFS provides a managed NFS (Network File System) that can be mounted and accessed by multiple EC2 instances across multiple AZs simultaneously.

It is expensive (roughly 3x the per-GB price of gp2), but you pay only for what you use.

It is compatible with all Linux-based AMIs for EC2 and exposes a POSIX file system (~Linux) with a standard file API.

EFS does not support Windows AMI.

EFS can encrypt data at rest using KMS, and data in transit using TLS.

EFS can be accessed from on-premises using an AWS Direct Connect or AWS VPN connection between the on-premises data center and VPC.

EFS can be accessed concurrently from servers in the on-premises data center as well as EC2 instances in the Amazon VPC

Performance mode

General purpose (default)

- latency-sensitive use cases (web server, CMS, etc…)

Max I/O

- higher latency, but higher aggregate throughput for highly parallel workloads (big data, media processing)

Storage Tiers

Standard

for frequently accessed files

ideal for active file system workloads and you pay only for the file system storage you use per month

Infrequent access (EFS-IA)

A lower-cost storage class that's cost-optimized for files that are accessed infrequently, i.e., not every day.

There is a cost to retrieve files, but a lower price to store them.

EFS Lifecycle Management lets you choose an age-off policy that moves files to EFS IA.

Lifecycle Management automatically moves data to the EFS IA storage class according to the lifecycle policy. For example, you can automatically move files into EFS IA once they have not been accessed for 14 days.
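The age-off policy described above boils down to a simple rule: a file whose last access is older than the threshold moves from Standard to IA. This sketch simulates that rule locally with a 14-day threshold; `FileEntry` and `apply_lifecycle_policy` are hypothetical names, and EFS itself evaluates the policy for you (transitions back out of IA are not modeled).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class FileEntry:
    path: str
    last_access: datetime
    storage_class: str = "STANDARD"


def apply_lifecycle_policy(files: List[FileEntry], now: datetime, after_days: int = 14) -> List[str]:
    """Move files not accessed for more than `after_days` days from Standard to IA.
    Returns the paths that were transitioned."""
    cutoff = now - timedelta(days=after_days)
    moved = []
    for f in files:
        if f.storage_class == "STANDARD" and f.last_access < cutoff:
            f.storage_class = "IA"
            moved.append(f.path)
    return moved


if __name__ == "__main__":
    now = datetime(2024, 1, 31)
    files = [
        FileEntry("/srv/app/config.yaml", datetime(2024, 1, 30)),
        FileEntry("/srv/reports/2023-q4.csv", datetime(2024, 1, 2)),
    ]
    print(apply_lifecycle_policy(files, now))  # ['/srv/reports/2023-q4.csv']
```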

EFS is a shared POSIX file system for Linux systems and does not work with Windows.

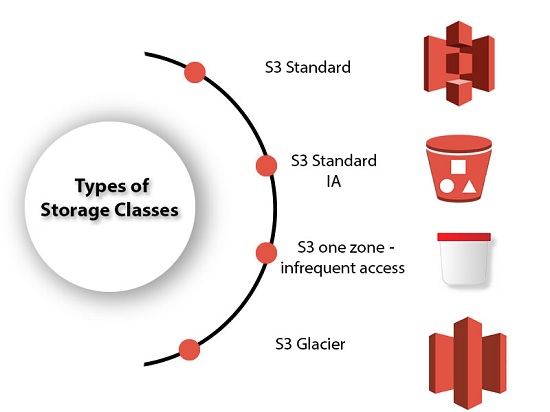

Amazon Object Storage

The enormous scalability and metadata capabilities of object storage are frequently tapped into by cloud-based applications. Simple Storage Service (Amazon S3) and Amazon Glacier are excellent object storage options for building modern applications from the ground up that require scale and adaptability. These solutions can also be used to ingest existing data stores for analytics, backup, and archiving purposes.

Amazon Simple Storage Service

Amazon S3, Amazon's oldest and best-supported storage service, uses an object storage model that can store and retrieve any amount of data. Websites, mobile apps, business applications, and IoT sensors or other devices are just a few examples of sources whose data can be stored in S3 and retrieved later.

With support for high bandwidth and demand, S3 has been used extensively to host web content. Static websites, including their JavaScript files, can be hosted directly from S3. S3 lifecycle management rules can also migrate stored data to Amazon Glacier for cold storage.

S3 supports encryption of data at rest and data in transit.

S3 supports both server-side encryption, in three variants, and client-side encryption.

Server-Side Encryption

SSE-S3 – encrypts S3 objects using keys handled & managed by AWS

SSE-KMS – leverage AWS Key Management Service to manage encryption keys. KMS provides control and audit trail over the keys.

SSE-C – when you want to manage your own encryption keys. AWS does not store the encryption key. Requires HTTPS.
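With SSE-C, the client supplies the key on every request through three S3 request headers: the algorithm (`AES256`), the base64-encoded 256-bit key, and the base64-encoded MD5 digest of the key, which S3 uses to check that the key arrived intact. The sketch below only builds those headers with the standard library; sending the request (over HTTPS, with request signing) is left to an S3 client, and `sse_c_headers` is a hypothetical helper name. The same headers must accompany later GET requests for the object.

```python
import base64
import hashlib
import secrets


def sse_c_headers(key: bytes) -> dict:
    """Build the SSE-C request headers for a 256-bit customer-provided key."""
    if len(key) != 32:
        raise ValueError("SSE-C uses AES-256, so the key must be 32 bytes")
    return {
        "x-amz-server-side-encryption-customer-algorithm": "AES256",
        "x-amz-server-side-encryption-customer-key": base64.b64encode(key).decode("ascii"),
        # S3 uses this digest to check that the key arrived intact
        "x-amz-server-side-encryption-customer-key-MD5":
            base64.b64encode(hashlib.md5(key).digest()).decode("ascii"),
    }


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # you must store this key yourself; S3 does not keep it
    for name, value in sse_c_headers(key).items():
        print(f"{name}: {value}")
```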

Client-Side Encryption

Uses a client library such as the Amazon S3 Encryption Client

Clients must encrypt data themselves before sending it to S3

Clients must decrypt data themselves when retrieving from S3

Customer fully manages the keys and encryption cycle

Other users or AWS accounts can access data in S3 only if an administrator has written an access policy granting them access. Multi-Factor Authentication (MFA) can add another layer of protection for object operations. S3 also supports a variety of compliance and security standards.

Use parallel threads and multipart upload for faster writes

Use parallel threads and ranged GET requests (the HTTP Range header) for faster reads

For list operations over a large number of objects, it's better to build a secondary index in DynamoDB

Versioning protects against unintended overwrites and deletions, but it does not protect against bucket deletion

VPC endpoints for S3 let a VPC transfer data to and from S3 over Amazon's internal network
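Both multipart upload and parallel ranged reads start from the same step: splitting an object into byte ranges. This sketch plans the ranges (respecting the S3 limits of a 5 MiB minimum part size, except for the last part, and at most 10,000 parts), formats the `Range` header value, and simulates concurrent ranged GETs against a local byte string with a thread pool. Function names are hypothetical, and no network calls are made; in practice each range would be one UploadPart or GET request.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

MIN_PART_SIZE = 5 * 1024 * 1024  # S3 minimum part size for multipart upload (all but the last part)
MAX_PARTS = 10_000               # S3 maximum number of parts per multipart upload


def plan_parts(size: int, part_size: int = 8 * 1024 * 1024) -> List[Tuple[int, int]]:
    """Split an object into inclusive (start, end) byte ranges of roughly part_size bytes."""
    if part_size < MIN_PART_SIZE:
        raise ValueError("part size is below the 5 MiB multipart minimum")
    while -(-size // part_size) > MAX_PARTS:  # ceil(size / part_size) exceeds the part limit
        part_size *= 2
    return [(start, min(start + part_size, size) - 1) for start in range(0, size, part_size)]


def range_header(start: int, end: int) -> str:
    """Value for the HTTP Range header of a ranged GET (end offset is inclusive)."""
    return f"bytes={start}-{end}"


def parallel_read(data: bytes, ranges: List[Tuple[int, int]], workers: int = 4) -> bytes:
    """Stand-in for concurrent ranged GETs: each worker fetches one slice of `data`."""
    def fetch(byte_range: Tuple[int, int]) -> bytes:
        start, end = byte_range
        return data[start : end + 1]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return b"".join(pool.map(fetch, ranges))  # map() yields results in input order


if __name__ == "__main__":
    blob = bytes(range(256)) * (20 * 1024 * 1024 // 256)  # 20 MiB of sample data
    parts = plan_parts(len(blob))
    print([range_header(s, e) for s, e in parts])
    print(parallel_read(blob, parts) == blob)  # True
```

For a 20 MiB object with 8 MiB parts this yields three ranges, `bytes=0-8388607`, `bytes=8388608-16777215`, and `bytes=16777216-20971519`; reassembling the slices in order reproduces the original bytes.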

Amazon Glacier

Amazon Glacier offers a safe, dependable, and very affordable storage option, and you can run analytics on archived data. Glacier also works with other AWS services such as S3 and CloudFront to move data in and out easily.

Amazon Glacier stores data as archives. An archive can consist of a single file or combine multiple files. Archives are organized into vaults, and you can query an archive to retrieve just the subset of data you need.

Durability is a top priority for an archiving service like Amazon Glacier. Glacier is designed to provide an average annual durability of 99.999999999% (eleven nines) for archives. Within an AWS Region, data is automatically spread across a minimum of three physically separated facilities.
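Eleven nines is easier to grasp as expected losses. Under a simplified model where each archive independently has an annual loss probability of 10^-11 (an assumption for illustration, not an AWS guarantee about how losses occur), storing 10,000,000 archives means you would expect to lose one archive roughly every 10,000 years. The sketch uses exact fractions to avoid floating-point noise; the function names are hypothetical.

```python
from fractions import Fraction


def expected_annual_losses(num_objects: int, nines: int = 11) -> Fraction:
    """Expected number of objects lost per year if each object independently has an
    annual loss probability of 10**-nines (99.999999999% durability when nines=11)."""
    return num_objects * Fraction(1, 10 ** nines)


def years_per_lost_object(num_objects: int, nines: int = 11) -> Fraction:
    """Average number of years between single-object losses under the same model."""
    return 1 / expected_annual_losses(num_objects, nines)


if __name__ == "__main__":
    print(expected_annual_losses(10_000_000))  # 1/10000
    print(years_per_lost_object(10_000_000))   # 10000
```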

Thanks for reading till the end; I hope you learned something useful.
